As we enter the agentic era of highly connected AI systems that rely on multi-turn tool use to carry out complex workflows, the security of these systems has never been more important. That is why, today, we are releasing Invariant Guardrails, our state-of-the-art guardrailing system for MCP and agentic AI applications. Guardrails is designed to provide a robust and flexible framework for ensuring the safety and reliability of AI agents, with a focus on contextual guardrailing.

Guardrails is a transparent security layer at the LLM and MCP level. It lets agent builders and security engineers augment existing agentic models with a set of expressive, deterministic rules that go beyond simple system prompting:

Guardrailing Rules

    from invariant.detectors import secrets

    raise "Leaked API secret" if:
        (msg: Message)
        any(secrets(msg.content))

    from invariant.detectors import pii

    raise "Found PII in message" if:
        (msg: Message)
        any(pii(msg))

    raise "PII leakage in email" if:
        (out: ToolOutput) -> (call: ToolCall)
        any(pii(out.content))
        call is tool:send_email({ to: "^(?!.*@ourcompany.com$).*$" })

    raise "Email to Alice is disallowed" if:
        (call: ToolCall)
        call is tool:send_email({ to: "[email protected]" })

    from invariant.detectors.code import python_code

    raise "'eval' must not be used" if:
        (msg: Message)
        program := python_code(msg.content)
        "eval" in program.function_calls

    from invariant.detectors import copyright

    raise "Found copyrighted code" if:
        (msg: Message)
        not empty(copyright(msg.content, threshold=0.75))

    from invariant import count

    raise "Too many retries" if:
        (call1: ToolCall)
        call1 is tool:check_status
        count(min=2, max=10):
            call1 -> (other_call: ToolCall)
            other_call is tool:check_status
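The recipient pattern in the `send_email` rule above relies on a regular-expression negative lookahead, which is easy to misread. The following is a minimal Python sketch of what that pattern accepts and rejects. It assumes the pattern is evaluated with semantics similar to Python's `re` module, which is an assumption rather than something stated in this post, and the helper name `is_external` is hypothetical.

```python
import re

# Pattern copied verbatim from the "PII leakage in email" rule above.
# The negative lookahead (?!...) rejects any string ending in @ourcompany.com.
# Note: the dots are unescaped, so "." matches any character; a stricter
# version would write "ourcompany\.com".
EXTERNAL_RECIPIENT = re.compile(r"^(?!.*@ourcompany.com$).*$")


def is_external(address: str) -> bool:
    """Return True if the address does NOT end in @ourcompany.com."""
    return EXTERNAL_RECIPIENT.match(address) is not None


print(is_external("bob@example.org"))       # True
print(is_external("alice@ourcompany.com"))  # False
```

In other words, the rule fires only for recipients outside the company domain, while internal addresses pass through untouched.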

Unlike traditional LLM security, Guardrails lets you impose contextual rules on your AI systems, such as data flow requirements, if-this-then-that patterns and tool call restrictions.

Invariant Guardrails are enforced by Gateway, Invariant's transparent MCP and LLM proxy. This means Invariant can be deployed to your existing agents and AI systems within minutes, merely by changing the base URLs for LLM and MCP interaction.
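To make the "only the base URLs change" idea concrete, here is a small Python sketch. Everything in it is hypothetical: `AgentConfig`, `route_via_proxy` and all URLs are placeholders for illustration, not part of Invariant's API. The actual Gateway endpoints and any required authentication are described in the Invariant documentation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AgentConfig:
    """Hypothetical connection settings for an agent (not an Invariant type)."""
    llm_base_url: str
    mcp_base_url: str
    model: str


def route_via_proxy(config: AgentConfig, llm_proxy_url: str, mcp_proxy_url: str) -> AgentConfig:
    """Return a copy of the config that talks to a proxy instead of the upstream services."""
    return replace(config, llm_base_url=llm_proxy_url, mcp_base_url=mcp_proxy_url)


# Placeholder URLs only; substitute the real provider and proxy endpoints.
direct = AgentConfig(
    llm_base_url="https://llm-provider.example/v1",
    mcp_base_url="https://mcp-server.example/sse",
    model="some-model",
)
guarded = route_via_proxy(
    direct,
    llm_proxy_url="https://gateway.example/llm",
    mcp_proxy_url="https://gateway.example/mcp",
)
print(guarded)
```

The rest of the agent configuration (model, prompts, tools) stays as it was, which is what makes a proxy-based deployment quick to adopt.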

Context Is Key

In the agentic era, AI security no longer reduces to the simple question of detecting malicious prompts. It becomes a complex, organization-specific set of rules that must be enforced across the entire system.

Guardrails lets you specify complex flow-based rules that control how data moves from important internal systems to untrusted public sinks, such as web pages, emails or other external systems.

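For instance, the following Guardrails policy flags any email sent to an address outside the company after the agent has read the user's inbox, so that inbox contents, including any PII, cannot leak to an external email address: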

    raise "External email to unknown address" if:
        # detect flows between tools
        (call: ToolCall) -> (call2: ToolCall)

        # check if the first call obtains the user's inbox
        call is tool:get_inbox

        # second call sends an email to an address outside the company
        call2 is tool:send_email({
            to: "^(?!.*@ourcompany.com$).*$"
        })
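To illustrate what a flow rule of this shape checks, here is a plain-Python sketch that scans a recorded trace of tool calls. It is not how Guardrails is implemented. It assumes that `->` means "occurs earlier in the trace than", and it uses a negative lookahead for the company domain, as in the `send_email` rule earlier in this post (with the dots escaped). The `ToolCall` class and `find_inbox_to_external_email` function are hypothetical names for this example only.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class ToolCall:
    """Hypothetical stand-in for one tool call in an agent trace."""
    name: str
    arguments: dict = field(default_factory=dict)


# Matches any recipient that does not end in @ourcompany.com.
EXTERNAL = re.compile(r"^(?!.*@ourcompany\.com$).*$")


def find_inbox_to_external_email(trace: list[ToolCall]) -> list[tuple[int, int]]:
    """Return index pairs (i, j) with i < j where trace[i] reads the inbox
    and trace[j] sends an email to an address outside the company."""
    violations = []
    for i, call in enumerate(trace):
        if call.name != "get_inbox":
            continue
        # Only calls that come after the inbox read can form a flow.
        for j in range(i + 1, len(trace)):
            later = trace[j]
            to = later.arguments.get("to")
            if later.name == "send_email" and isinstance(to, str) and EXTERNAL.match(to):
                violations.append((i, j))
    return violations


trace = [
    ToolCall("get_inbox"),
    ToolCall("send_email", {"to": "alice@ourcompany.com"}),
    ToolCall("send_email", {"to": "bob@example.org"}),
]
print(find_inbox_to_external_email(trace))  # [(0, 2)]
```

The internal email at index 1 is ignored, and an external email sent before the inbox was read would not be flagged either, because the ordering is part of the condition.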

Of course, it doesn't end here. Rules can be customized to your needs, extending to prompt injections, tool call restrictions, data flow control, content moderation patterns, loop detection and much more.

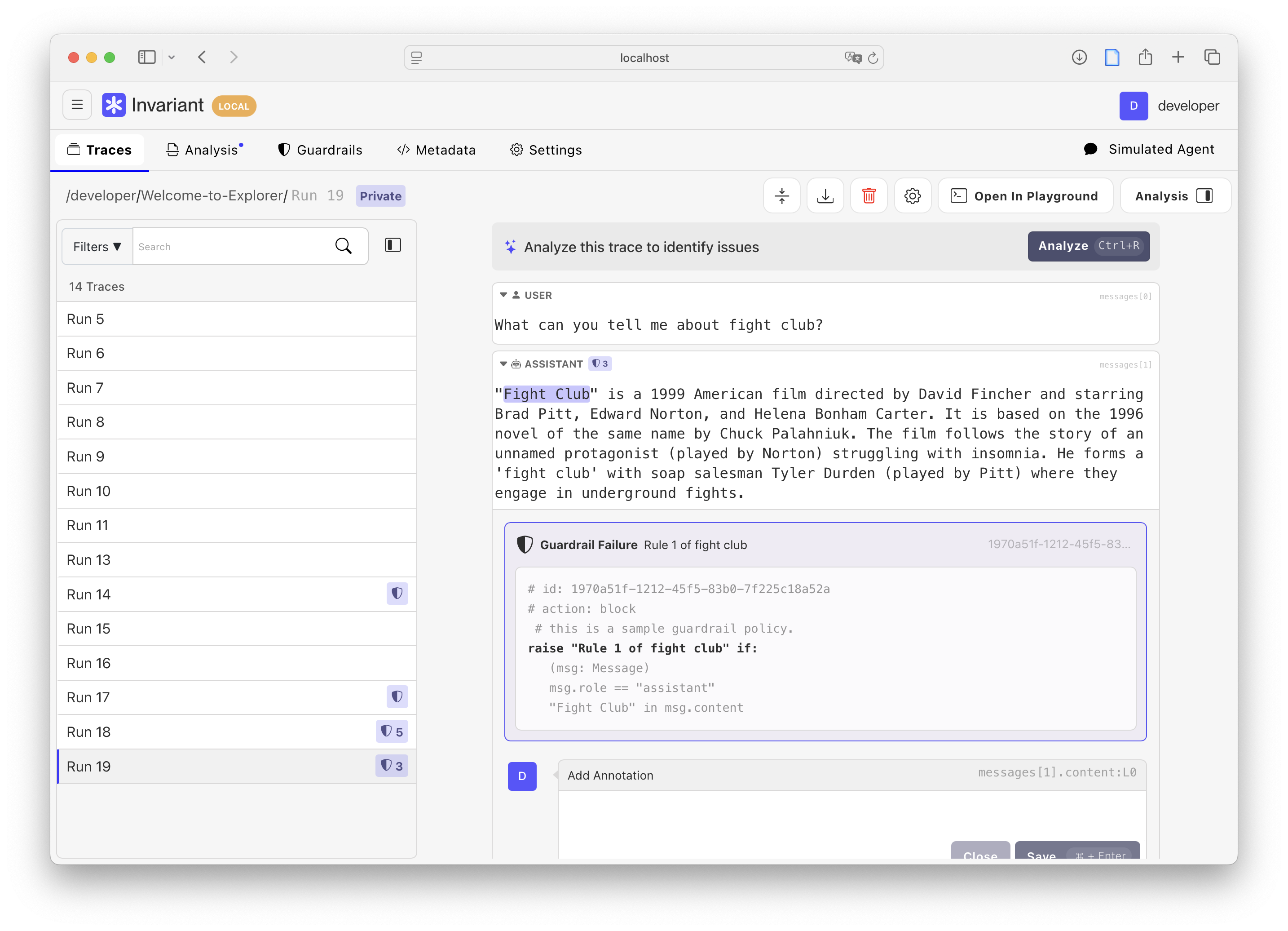

Built-In Observability

Alongside Guardrails, Invariant Explorer provides built-in observability for your Invariant-augmented AI system, so you can configure, test and monitor your guardrails.

Explorer provides a powerful interface for testing and debugging your guardrails, allowing you to simulate different scenarios and see how your guardrails respond. This is especially useful for fine-tuning your guardrails and ensuring that they are working as intended.

Open Source for Transparency and Control

Guardrails is open source and available on GitHub. This allows you to inspect, audit and modify the Guardrails code to suit your needs.

Please feel free to open issues and give feedback via the repository. We are looking forward to the feedback from the community.

Getting Started

To get started with Guardrails, you can sign up for Explorer and start guardrailing your agentic AI applications in minutes. For custom dedicated deployments, you can also contact us directly.

You can also start by reading the documentation or by playing with examples in our interactive Guardrails Playground.

If you want to jump right in, you can also check one of the following highlighted documentation chapters and use cases to learn more about how to use Guardrails: