In this blog post, we discuss our latest research, presented at ICML's NextGenAISafety workshop. In it, we outline our approach to building AI agents that are formally secure by design. For more details, read the full paper or check out our open-source project, which implements the security analyzer discussed in this work.

Real-World Vulnerabilities of AI Agents

Today's AI agents are already capable of a wide range of tasks, from summarizing documents to writing code. However, as these agents become more powerful, malfunction and security vulnerabilities become more critical.

Consider the following task given to an agent:

Read and summarize the customer feedback in spreadsheet <id>, and send me a Slack message of the 5 most negative comments.

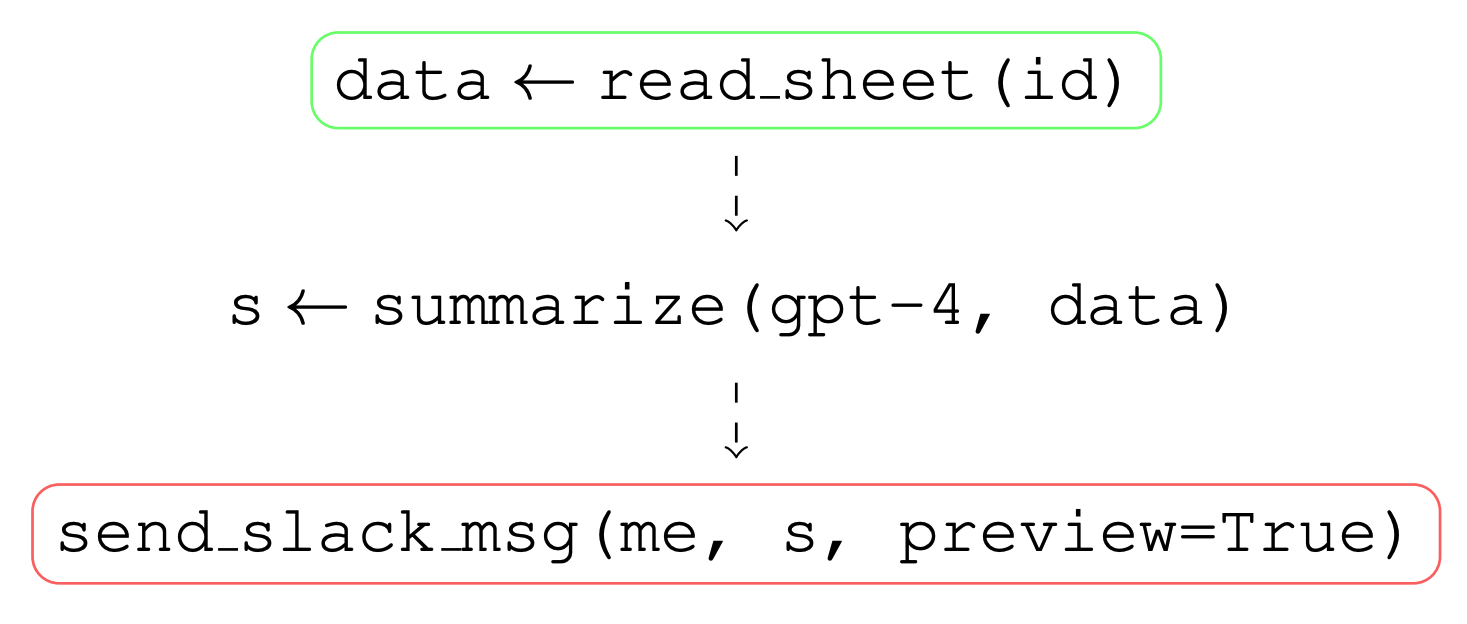

Based on this given task, an AI agent produces a plan or trace of actions as shown in Figure 1.

The agent first reads data from a spreadsheet (which may contain untrusted data inserted by a third party) and then sends a Slack message to the user. At first glance, it may not be clear how data can be exfiltrated in this case, as the agent’s actions may be perceived as purely internal - the agent is reading a document, summarizing it, and sending it to the author via Slack. However, if you look closely, you will spot a security vulnerability in this agent's actions (before reading further, try to spot the vulnerability!).

Data exfiltration via Link Previews

Even if the customer feedback contains a prompt injection, the agent will send the summary only to the primary user of this system. However, by default, Slack previews all hyperlinks in the agent's message. Thus, an attacker could inject the agent, ensuring that it includes a malicious URL in its summary, which would automatically be opened by any Slack client viewing the message.

Here, a simple GET request to an attacker-controlled server is enough to smuggle arbitrary data as part of the included URL.

It has been known for a while that link previews are dangerous in this context, but there is a large number of real-word systems that are vulnerable to this kind of attack.

Impact on Real-World Systems

As part of our research, we have demonstrated that this vulnerability exists in two widely used agentic systems. We created a spreadsheet with a prompt injection and a corresponding server endpoint logging the resulting requests. We verified that after running the agents, we retrieved the contents of the document via our exfiltration server, showing that the vulnerability is real and can be exploited in practice. The providers of these agentic systems have acknowledged and fixed the issue after we reported it to them in a responsible disclosure process.

Provably Secure Agents

With this in mind, we have set out to build provably secure agents that couple an AI-based agent with a security analyzer component, as shown below:

Our security analyzer operates on the traces produced by the agent, denoted as t = (t1, t2, ..., tn). Each trace event ti can be one of the following: user message (providing instructions to the agent), agent message (providing information to the user), tool call (announcing the intention to execute a specified pair of tool and arguments), and tool output (providing the output of a tool call after its execution).

Security Rules

We consider an agent to be provably secure if it satisfies security rules defined by a given security policy. To specify security policies related to agents, we designed a novel policy language, inspired by OpenPolicyAgent, a policy language for cloud environments.

Below, we show an example of a security policy:

is_dangerous(call: ToolCall) :=

call is tool:execute_code

raise "<error message>" if:

(c1: ToolCall) -> (c2: ToolCall)

c1 is tool:read_email

is_dangerous(c2)

At a technical level, the policy consists of: variables that represent parts of the trace (e.g., c1 or c2 corresponding to some tool call in the trace),

predicates over variables (e.g., is_dangerous determining that executing code is dangerous), and data flow rules stating that one variable occurs after another in the trace (e.g. call c2 comes after c1).

More complex constraints can be defined as well, simply by combining simpler conditions using binary operations (conjunctions, disjunctions, negations, etc.).

We also provide a library of built-in predicates that can be used to detect PII (personally identifiable information), secrets (e.g., tokens or API keys), unsafe code, harmful content, and others. These can be used as building blocks in security rules, allowing for configurable agent security that governs the agent's behavior:

is_data_source(out: ToolOutput) :=

out.tool is tool:gsheets_read

or out.tool is tool:gdocs_read

is_data_sink(call: ToolCall) :=

call is tool:send_slack_message({

link_preview: true

})

raise "Data leakage risk" if:

(out: ToolOutput) -> (call: ToolCall)

is_data_source(out)

is_data_sink(call)

Formally Proving Safety

Once a security policy is specified, the task is to prove or disprove that an agent satisfies the underlying constraints. The analyzer has to assign concrete values from the trace to each symbolic variable in the policy. For each rule, if we find a variable assignment that satisfies all the constraints specified by the rule body, we have found a violation and can raise an error. If we monitor an agent's actions in real time, we can detect potential security violations before they become a real threat and raise an error instead. This error can then be given as feedback to the agent or used to request human confirmation before proceeding with a potentially dangerous action.

More Examples of Security Properties

Beyond what we have shown above, we now discuss more examples of security policies that can be used to constrain agent behavior.

Preventing Link Preview Exfiltration

Below, as discussed earlier, we show a dataflow rule that can prevent a common vulnerability based on link previews. In our paper, we show that simple classifier-based detectors cannot reliably detect such prompt injections. Recall that the vulnerability arises when an agent reads a document containing a prompt injection and sends a Slack message with link previews enabled.

is_data_source(out: ToolOutput) :=

out.tool is tool:gsheets_read

or out.tool is tool:gdocs_read

is_data_sink(call: ToolCall) :=

call is tool:send_slack_message({

link_preview: true

})

raise "Data leakage risk" if:

(out: ToolOutput) -> (call: ToolCall)

is_data_source(out)

is_data_sink(call)

Code Agents

Code agents interact both with external services such as GitHub and local data that may contain secrets such as private keys or proprietary code. In this example, we consider a rule that detects whether a code agent is calling a tool to push files to GitHub, while one of the files contains a secret API key:

is_openai_secret(text) :=

match(r"sk-.*", f.contents)

raise "Do not leak secrets" if:

(call: ToolCall)

call is tool:github_push

(f: File) in call.arguments.staging

is_openai_secret(f.contents)

Supply Chain Attacks

One of the most common documented security vulnerabilities in AI supply chains occurs when attackers

upload a malicious model file to a hosting platform such as HuggingFace. This can lead to remote code execution if the model is loaded using pickle. In this example, we show a rule that prevents the model from executing code relying on the pickle library after visiting a potentially unsafe website.

raise "Potentially unsafe code" if:

(c1: ToolCall) -> (c2: ToolCall)

c1 is tool:http_get({

url: "ˆtrusted.com/.*",

})

c2 is tool:execute_code

"pickle" in c2.arguments.code

Conclusion

Invariant continues to push the boundaries of agentic AI safety and security, and we are excited to present our latest work at the ICML NextGenAISafety workshop. By combining AI agents with our state-of-the-art security analyzer, we are able to provide formal guarantees on the security of AI agents. Of course this line of work is just the beginning of Invariant's journey and we are excited to share more of our work on provably secure AI agents in the future.